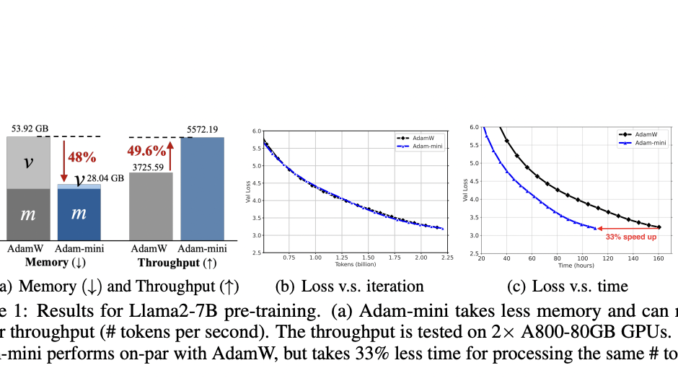

Adam-mini: A Memory-Efficient Optimizer Revolutionizing Large Language Model Training with Reduced Memory Usage and Enhanced Performance

This line of research focuses on optimization algorithms for training large language models (LLMs), which are essential for understanding and generating human language. These models are critical for various applications, including natural language processing and […]