Optimizing Memory for Large-Scale NLP Models: A Look at MINI-SEQUENCE TRANSFORMER

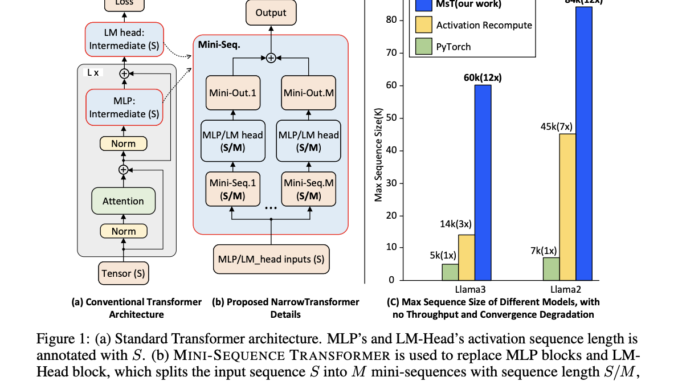

Transformer models have reshaped natural language processing (NLP), steadily advancing model performance and capabilities. This rapid progress, however, has introduced substantial challenges, particularly the memory required to train these large-scale […]